Ripple’s AIOps Pivot: What “Amazon Bedrock for XRPL Operations” Would Really Change (and What It Wouldn’t)

Every bull market has its loud story—price, narratives, slogans. The quieter story is usually the one that survives the cycle: how the plumbing gets rebuilt so the next wave of users doesn’t break it. That’s why the rumor that Ripple is discussing the use of Amazon Bedrock (AWS’s managed foundation-model toolkit) to support operations and scaling of the XRP Ledger (XRPL) is more interesting than it looks at first glance.

If the goal is to use AI to analyze system logs, accelerate incident handling, and compress investigations from “2–3 days” to “2–3 minutes,” then we’re not talking about AI replacing engineers. We’re talking about the next iteration of operational maturity—the moment blockchains start behaving like modern cloud platforms: measurable, auditable, and continuously optimized.

Important note: public details on the Bedrock angle are limited. So instead of treating it as confirmed partnership news, this piece treats it as a strategic direction—an “if Ripple does this, here’s what it implies” analysis. That framing is useful even if the tool choice changes, because the pressure pushing blockchain teams toward AIOps is very real.

The real bottleneck isn’t TPS—it’s “time to certainty” during an incident

Blockchain conversations love throughput. Faster blocks, lower fees, better latency. But production systems rarely fail because they can’t do the average case; they fail because the edge case arrives at 3:17 a.m., triggers a cascade, and suddenly the team is fighting for a clear picture of what’s happening.

In other words, scaling is not just “more capacity.” It’s faster decision-making under uncertainty. And on public networks, uncertainty has a unique flavor: you’re coordinating across validators, node operators, API providers, wallets, and third-party services—often with incomplete information and different incentives.

Traditional cloud teams measure this with boring but powerful metrics: MTTD (mean time to detect), MTTR (mean time to resolve), and “time to mitigation.” Blockchains add another layer: the cost of public confidence. When a popular chain is degraded, users don’t just wait; they reroute liquidity, swap to other networks, and narrate the problem in real time on social platforms. Reliability becomes marketing—whether you like it or not.

Why logs are a perfect use-case for foundation models

Logs are where reality hides. And they are also where humans lose time. A single incident can involve terabytes of traces, metrics, alerts, and configuration changes. The most expensive part is often not the fix; it’s the search for the few lines that matter, plus the mental work of stitching them into a coherent cause-and-effect story.

Foundation models shine in that stitching step: summarization, pattern recognition, “what changed,” and mapping symptoms to known failure modes. If XRPL operations has runbooks, historical post-mortems, alert taxonomies, and change logs, then an AI layer can act like a high-speed librarian—surfacing the right context while the humans keep the steering wheel.

Practically, an “AI for ops” system tends to land in three capabilities:

1) Incident compression: turn thousands of lines into a short narrative (“what happened, when, where”).

2) Hypothesis generation: propose plausible root causes with supporting evidence (“this resembles the mempool backlog incident from August; check X and Y”).

3) Decision support: recommend next actions from runbooks (“roll back version Z; temporarily throttle endpoint Q; re-index component R”).

That’s the promise. But the value is in the boundaries—what the model is not allowed to do. Which brings us to the part most headlines skip.

Hallucinations are not a bug in finance-grade ops—they’re a governance problem

In a consumer chatbot, hallucinations are annoying. In system operations, they are expensive. If a model confidently misattributes a root cause, the team loses time and may deploy a “fix” that worsens the outage. For a public blockchain, that can translate into market damage, reputational harm, and second-order failures across exchanges and DeFi protocols.

So the winning pattern is not “AI runs production.” It’s AI suggests; humans approve; systems verify. The design goal is to tighten the loop between detection and human decision—without turning the model into an authority figure.

In practice, this means:

• Retrieval-first: the model should cite logs, traces, and known runbooks, not invent explanations.

• Confidence gating: low-confidence outputs should be framed as questions, not conclusions.

• Action constraints: the model can draft a mitigation plan, but execution requires explicit human approval and automated checks.

• Auditability: every suggestion should be stored with the evidence it used, so post-mortems can evaluate the AI’s role.

If Ripple is looking at Bedrock specifically, the “managed models + enterprise controls” angle would make sense here—not because centralized tooling is inherently superior, but because production ops demands permissioning, logging, and predictable security posture.

Does using AWS tooling “centralize” XRPL? Not in the way people think

Whenever a blockchain team touches cloud services, the internet reflex is to shout “centralization.” Sometimes that criticism is valid. But it’s often misapplied.

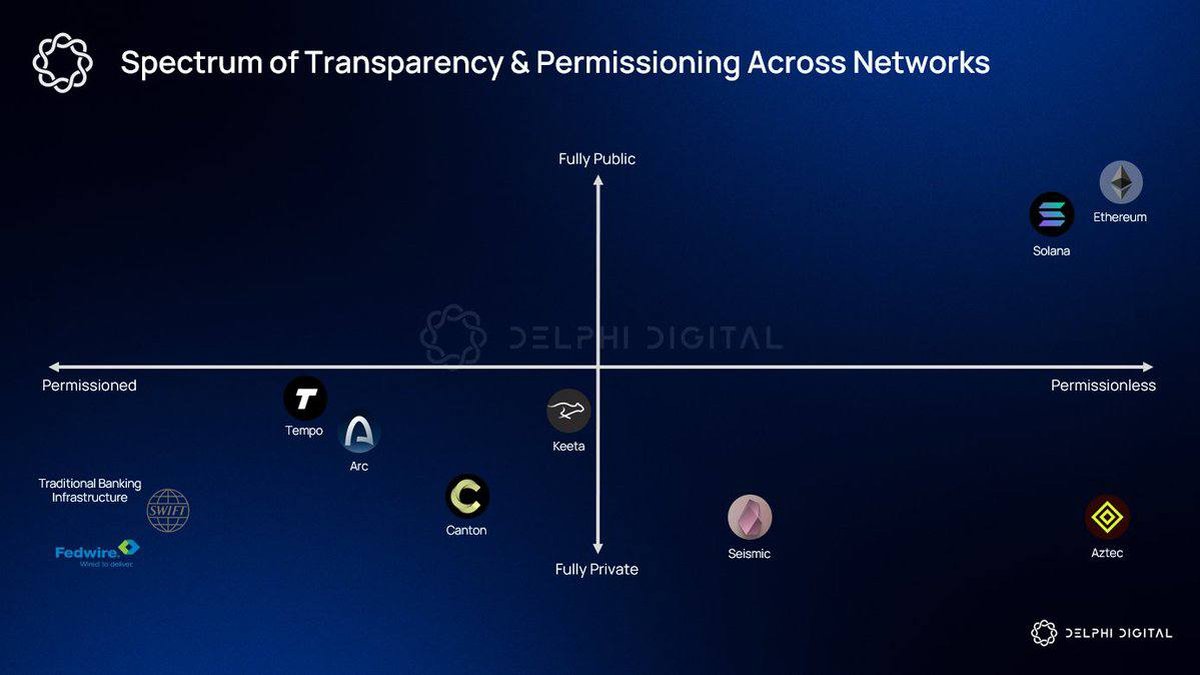

There’s a big difference between consensus centralization (who can validate and influence the chain) and operational centralization (where a company runs its internal monitoring stack). If Ripple uses an AI layer to triage its own logs, that doesn’t automatically change who produces blocks or how the protocol rules work.

The deeper question is subtler: does improved operational capability give Ripple an informational advantage that meaningfully shapes the network? Possibly—better detection and faster responses can influence how quickly software gets patched, how quickly incidents are communicated, and how quickly ecosystem partners coordinate. But that influence already exists today through human expertise. AI simply changes the speed and consistency of that expertise.

In other words, the governance conversation should focus on transparency and process, not the brand name on the tooling.

Why this matters for enterprise adoption: “Reliability” is the hidden compliance feature

For enterprises, the main fear is rarely “blockchain is too slow.” The fear is operational unpredictability: outages, unclear incident ownership, and the inability to explain what happened to auditors or regulators.

An AI-assisted operations stack can help on exactly those pain points. Not by making the chain perfect, but by making the system’s behavior legible. When you can summarize an incident, trace the impact, and document mitigation steps in a consistent format, you’re building the kind of operational narrative that risk teams understand.

This is also where the “2–3 days to minutes” claim becomes meaningful. That isn’t just speed. It’s a shift in organizational confidence: the idea that a network can be both open and professionally operated, closer to a critical infrastructure mindset than a hobbyist experiment.

A labor-market angle: AI doesn’t remove jobs—it changes which jobs count

The broader macro debate about AI and employment often swings between extremes. One camp says AI will slow hiring because big companies can do more with fewer people. Another camp says AI will increase productivity and expand what companies can build.

Both can be true, depending on the time horizon. In blockchain operations, the near-term effect is likely this: teams will still hire, but they’ll prioritize “AI-forward” operators—people who can write playbooks that models can retrieve, design guardrails, and build observability systems that are friendly to both humans and machines.

That’s not just prompt engineering. It’s a new hybrid craft: SRE + data hygiene + security + protocol knowledge. If XRPL wants to scale enterprise usage, that craft becomes a strategic asset.

What this means for XRPL’s roadmap: the “boring layer” becomes a competitive moat

Crypto markets reward novelty, but networks win through compounding trust. If XRPL can reduce incident response time, improve uptime, and standardize operations across partners, it can create a moat that looks boring on Twitter and priceless in procurement meetings.

This also reframes “scaling.” When the chain can be observed and defended like a cloud service, it becomes easier to support more complex workloads: tokenization pilots, enterprise payment corridors, and regulated financial products that require stable operations.

That doesn’t mean price goes up. It means the network becomes more usable. Markets then decide what that usability is worth.

What to watch next (without turning it into a hype checklist)

If Ripple is truly moving toward AI-assisted ops, there are a few concrete signals that matter more than announcements:

• A published case study or technical write-up: not marketing language, but metrics—MTTD/MTTR improvements, false-positive rates, and post-mortem quality.

• Open tooling patterns: adapters for log pipelines, runbook formats, and evidence-linked incident summaries.

• A governance posture: clear statements about what AI can and cannot do in production, and how outputs are audited.

• Ecosystem adoption: whether major XRPL infrastructure providers (wallets, RPC gateways, custodians) align around shared incident language.

Those signals would tell us whether AI is being used as a demo—or as infrastructure.

Conclusion

“Ripple uses Amazon Bedrock for XRPL operations” sounds like a simple integration story. The more interesting interpretation is that public blockchains are entering a phase where operational excellence becomes the product. In that phase, AI isn’t the star; it’s the industrial tool that turns messy reality into faster, safer decisions.

If the implementation is disciplined—retrieval-first, human-approved, auditable—AI can compress incident uncertainty without introducing new systemic risk. If it’s undisciplined, it will add a new failure mode: confident mistakes at machine speed. The difference won’t be decided by the model. It will be decided by governance, process, and engineering culture.

And that’s the real signal: when a blockchain team talks about logs, runbooks, and time-to-resolution, it’s usually because they expect the next wave of users to show up.

Frequently Asked Questions

Does using Amazon Bedrock make XRPL centralized?

Not by itself. Tooling used for internal monitoring does not change the consensus rules. Centralization concerns are more relevant if a single party gains disproportionate control over validation or protocol decisions.

Can AI prevent outages entirely?

No. The realistic goal is to detect issues earlier, reduce investigation time, and standardize responses. Reliability improves through many small wins, not a single “AI switch.”

Why not run an open-source model instead of a managed service?

Some teams do. Managed services can simplify security controls, access management, and compliance requirements. Open-source models can offer more customization and control. The trade-off depends on governance, budget, and risk posture.

What’s the biggest risk of AI in ops?

Over-trust. A model that sounds confident can push teams toward premature conclusions. That’s why evidence-linked outputs and human approval gates are essential.

Disclaimer: This article is for informational and educational purposes only and does not constitute financial, investment, legal, or tax advice.